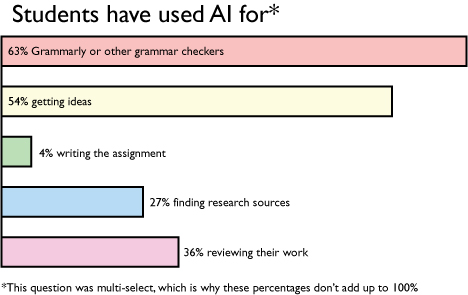

Innovations in artificial intelligence have changed education significantly as tools such as ChatGPT have become popular. Many students have started using AI to complete their assignments, and educators are learning to adjust while embracing the new technology.

The IB department has its own AI policy since the IB organization requires all affiliated schools to have one. It divides common uses of AI into ethical uses, which include quality control and reviewing for tests, and unethical uses, which include using AI to write assignments. This is especially an issue when the student doesn’t actually understand the task. The policy also states that transparency around AI use is paramount.

The IB itself has stated on its website that it isn’t effective to simply shy away from AI, given its prevalence and growing role in society. Like NSD’s policy in its Rights and Responsibilities Handbook, the IB stressed that AI is a tool and that continuing to think critically is crucial for responsible use.

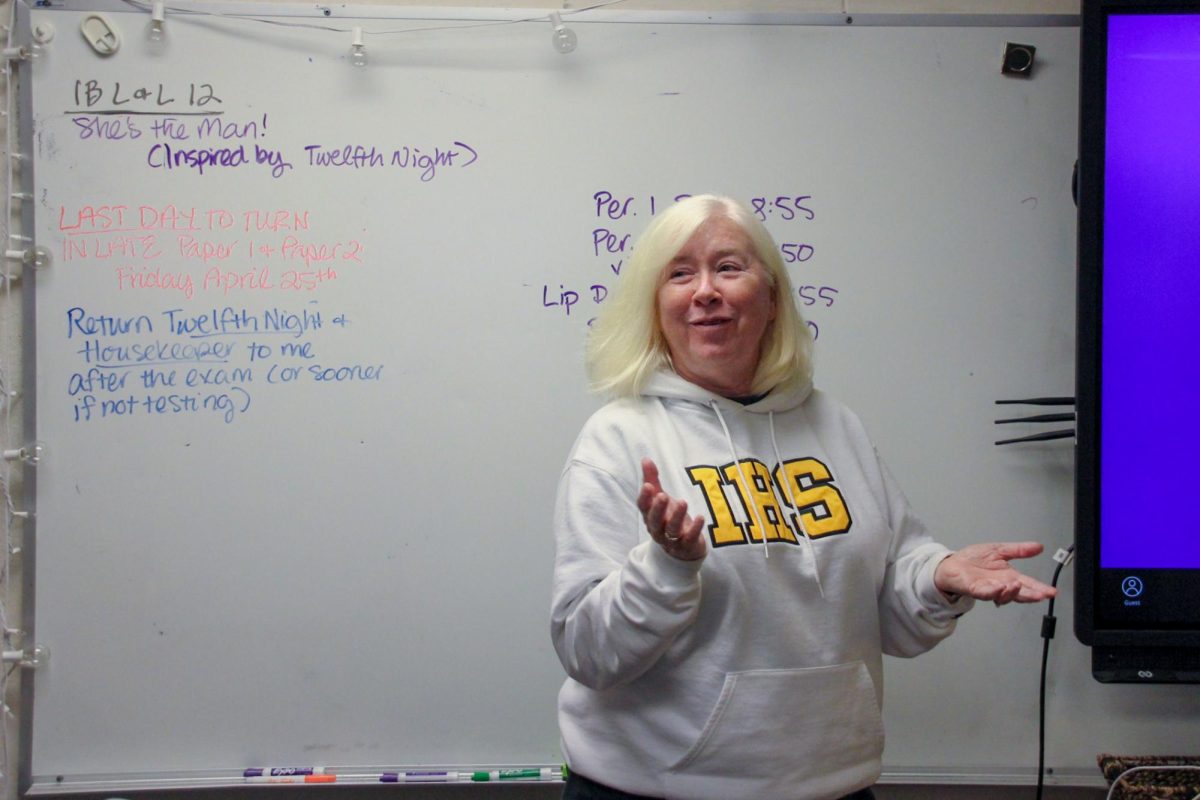

IB coordinator Amy Monaghan (she/her) said she was initially apprehensive about AI use but has become more comfortable with it over time. She compared the introduction of ChatGPT to the introduction of Wikipedia several years ago.

“I remember everybody being really upset about Wikipedia. ‘Oh my gosh, kids are going to be using this to write papers,’ and now we’re very comfortable with saying, ‘This is how you use Wikipedia,’” Monaghan said.

Many teachers include clauses about AI in their syllabuses and have received training on AI use over the past two years. Because the capabilities of AI are constantly changing, Inglemoor doesn’t have an official, unified AI policy. NSD’s Rights and Responsibilities Handbook, however, outlines broad guidelines for AI use, stating that AI should not be a substitute for critical thinking and should only be used for assignments when approved by the teacher.

To detect AI content in assignments, many teachers use an assignment submission tool called TurnItIn, which compares assignments to other submissions and online content. IB History of the Americas teacher Timothy Raines (he/him) said it’s generally clear when students use AI.

“It’s pretty obvious to me when it’s AI; you can tell by the language. You can tell it’s written in a way that the student would never write,” Raines said.

Similarly, German teacher Katrin Vince (she/her) said she can easily determine when assignments were completed with AI because of the difference in skill level or writing style.

“If you talk to somebody and be like, ‘So tell me, can you explain a little bit more about what you wrote?’ The student is not going to be able to do that because they haven’t done it,” Vince said. “But if you have done it in writing, and you put a lot of thought into it — so much that it’s amazing — you should be able to explain it very easily when we talk to you.”

Vince also said that teachers can use AI to some extent. Instead of spending an hour creating a fill-in-the-blank worksheet or YouTube video questions for her students to finish in a couple of minutes, she saves time by generating the worksheet with AI, though she always screens the questions to ensure quality. However, she worries that both students and teachers will become too reliant on AI and stop completing their own work.

“Nobody wants to read each other’s work anymore, because it’s too much work, so I kind of worry about it replacing us,” Vince said. “(There’s) a teaching website that creates assignments and assessments and even report cards and comments. You can have AI create all of that for you.”

Raines has also used AI when teaching. For his IB History of the Americas classes, Raines said he generates essays using ChatGPT for his students to assess using the IB rubric, showing that it can be a helpful tool to understand what type of writing meets the rubric.

“Generally speaking, what they realize is that the essay that’s produced by Chat(GPT) does not meet the requirements that IB would have in their rubrics,” Raines said.

Principal Adam Desautels (he/him) said there’s a need for flexibility in dealing with AI because it’s a constantly evolving technology. He said it’s important to keep up with its development and understand its capabilities.

“I just tried to gather as much information as I could,” Desautels said. “I downloaded it, I tried to use it for myself just to see how it would respond, I put in prompts to see what would come back. That was my process to see what it could be used for, in terms of the classroom, so that we could try and get ahead of it as much as we can.”

On the other hand, senior Valentina Desmaret (she/her) said blocking AI on school computers is very restrictive for students who want to use it as a learning tool.

“I understand it’s because we have to be ethic but at the same time, they’re limiting the improvement or the potential that students could have if they have access to the AI opportunities,” Desmaret said.

While blocking AI can limit students’ capabilities, senior Amalia Harb (she/her) said there is a fine line between using AI responsibly and going too far with it on assignments. She said that using AI for inspiration is fine, but anything more than that should be unacceptable.

“It can definitely be beneficial, but when it gets to a point where it’s doing your work for you — where it’s actually completing assignments that you need to do, that’s the point where it’s like, okay, you’re not doing anything as a person now, and you’re just relying on AI,” Harb said.

Harb said that even with so many restrictions in the school environment, some students are still able to find ways around them. Given a tool as powerful as AI, many students and educators agree that the ultimate responsibility lies with the user to use it ethically.

“It’s super complex, right? It’s all about how it’s used, how it’s cited, those things,” Desautels said. “It really leaves everything — whether it’s correct or not — to the user.”